Let’s get one thing straight: If your company thinks it’s not using AI, it’s lying to itself. Or, more likely, its employees are lying to it. Welcome to the age of stealth AI—the corporate equivalent of sneaking contraband into a movie theater, except instead of Twizzlers it’s ChatGPT, and instead of a sticky floor, the risk is a multimillion-dollar data breach.

According to recent survey data, nearly 7 out of 10 employees say they’ve brought consumer-grade AI tools like ChatGPT or Gemini into their workflow, often without IT’s blessing. Over half of them admit to pasting sensitive information into those tools—think client info, product road maps, and the kind of stuff that usually requires two levels of approval. Basically everything your compliance officer has nightmares about. If you listen closely, you can hear the lawyers screaming into their pillows.

A POINT OF NO RETURN FOR EMPLOYEES

Why are employees doing this? Simple: It makes their jobs better. Eighty-three percent of employees say AI makes work more enjoyable, mostly because it handles the repetitive, mind-numbing tasks that sap your will to live. Drafting emails? Summarizing documents? Research rabbit holes? Offloaded to the bot, thank you very much. While AI verbiage might not be perfect, it’s a lot less susceptible to the easily avoided mistakes that humans make when working on mundane daily tasks.

Workers aren’t dumb. They’ve heard the mantra: “It’s not that AI will steal your job; it’s that the person next to you using AI probably will.” Translation: If Bob in accounting is cranking out polished reports in half the time thanks to his secret AI sidekick, you’d better believe Susan is going to fire up her own ChatGPT tab.

In short, stealth AI isn’t about rebellion; it’s about staying competitive. Employees just want to look good, hit deadlines, and maybe snag that promotion. Security policies are just collateral damage.

DATA BREACHES WAITING TO HAPPEN

Of course, this all comes with more red flags than a clause that begins “notwithstanding the foregoing.” Data uploaded to a public chatbot doesn’t vanish; it sits on a third-party server, where it could become future training material or leak in ways your legal department hasn’t even imagined yet. Samsung, for example, pulled the plug on ChatGPT usage after one of its engineers accidentally shared internal source code with the chatbot.

Naturally, companies have tried to fight stealth AI with policies. Some forbid pasting sensitive data into chatbots. Others restrict or outright ban ChatGPT at work. Reportedly, nearly 3% of companies even prohibit AI entirely. None of it works, because the benefits are too good. Employees say AI makes them faster, better, or happier at their jobs. Telling employees to ditch AI is like telling them to stop using Google in 2003.

Even when companies do provide sanctioned AI tools, employees often default back to the public ones they know best. Why wrestle with your clunky corporate chatbot when OpenAI’s shiny interface is one tab away?

THE REGULATORY SWORD OF DAMOCLES

Here’s where things get serious. If employees are sharing restricted customer information with AI providers, congratulations: You may have just violated a contract or a privacy law.

Data-protection authorities are increasingly focused on whether companies are letting sensitive information leak into systems they don’t control. All it takes is one employee pasting confidential details into the wrong chatbot, and suddenly your compliance program looks like Swiss cheese.

The smart companies aren’t banning stealth AI; they’re outcompeting it.

- Microsoft: Instead of waging war on employees sneaking off to ChatGPT, Microsoft built AI directly into the apps people already use. Copilot now lives inside Word, Excel, and Outlook, making it both convenient and enterprise-compliant. The pitch is simple: Why risk leaking data to a random chatbot when you can get the same boost inside the software you’re already using?

- Salesforce: Marketing and sales teams live and die by speed. Salesforce saw the stealth AI trend early and embedded generative AI into its own CRM platform. Employees can now draft marketing copy, generate customer insights, and even brainstorm campaign ideas without resorting to unsanctioned tools.

- JPMorgan Chase: In finance, stealth AI could be catastrophic. One wrong paste and client financial data is floating around in someone else’s model. Rather than risk it, JPMorgan has been building secure, internal AI copilots trained on its own data. Employees still get the efficiency of AI, but the system is locked down with bank-grade compliance. The approach isn’t about saying no, but about saying “Here’s a safe way to do it.”

The lesson is clear: Make the safe option the easy option, and stealth AI starts to lose its appeal. The smart move isn’t prohibition; it’s integration. Build better tools, set smarter policies, and embrace AI as the productivity supercharger it is.

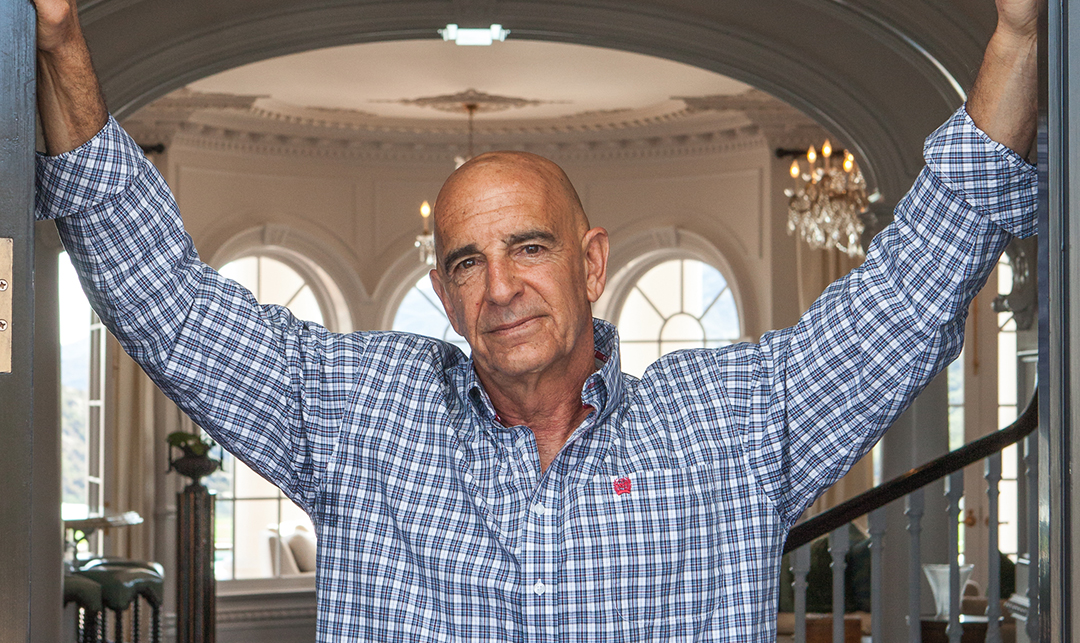

Rob Rosenberg is a partner at Moses Singer LLP, specializing in intellectual property, entertainment/media and technology, and AI and data law. He joined the firm in summer 2025 after a 22-year tenure at Showtime Networks, where he served as EVP and general counsel.