Driving 30 miles south from Salt Lake City, Utah, we reached Bluffdale and the area called Point of the Mountain, also known as Silicon Slopes and home to local Adobe, Microsoft, Oracle, and IBM offices. Above the valley, the snowcapped Wasatch Mountain Range rose to elevations of more than 10,000 feet. Its ski and recreation areas, such as Alta, Snowbird, Brighton, and Solitude, beckon the software elite who have come to this new hub of information technology.

The drive west into the desolate desert to Camp Williams was a stark contrast. The camp served as the setting for the Native American reservation in Montana in the hit series Yellowstone. It is a secure Utah National Guard base filled with warehouse-sized buildings. Those buildings house one of the largest data centers in the world: the Utah Data Center. Its formal name is the Intelligence Community Comprehensive National Cybersecurity Initiative Data Center, and Silicon Slopes techies call it the Bumblehive.

The Utah Data Center. Image source: National Security Agency.

This data-storage facility for the US intelligence community is designed to store an estimated zettabyte or more of data, though its actual capacity is classified. Its purpose is to support the Comprehensive National Cybersecurity Initiative. A zettabyte (ZB) is 1,000 exabytes (EB), an EB is 1,000 petabytes (PB), and a PB is 1,000 terabytes (TB). A typical personal computer today holds about 1 TB, so a single ZB equals the storage capacity of roughly 1 billion personal computers.
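Each step in that chain is a factor of 1,000, so converting between units comes down to powers of 1,000. The minimal sketch below assumes the decimal (SI) convention the paragraph uses. Some vendors use binary units instead, where 1 TiB is 2^40 bytes. The `convert` helper is hypothetical and exists only for illustration.

```python
# Decimal (SI) prefixes, as used in the text: each step up is a factor of 1,000.
UNIT_EXPONENT = {"B": 0, "KB": 1, "MB": 2, "GB": 3, "TB": 4, "PB": 5, "EB": 6, "ZB": 7}


def to_bytes(amount: int, unit: str) -> int:
    """Convert a whole number of `unit` (e.g. 3, "PB") to bytes using powers of 1,000."""
    return amount * 1000 ** UNIT_EXPONENT[unit]


def convert(amount: int, from_unit: str, to_unit: str) -> int:
    """Express `amount` of `from_unit` in `to_unit` (whole units, rounded down)."""
    return to_bytes(amount, from_unit) // to_bytes(1, to_unit)


# Assuming a typical PC holds 1 TB, the number of PCs per ZB equals the TB count.
pcs_per_zettabyte = convert(1, "ZB", "TB")
print(f"1 ZB = {pcs_per_zettabyte:,} TB")  # 1 ZB = 1,000,000,000 TB
```

Integer arithmetic is used throughout, so the result is exact, with no floating-point rounding.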

Additionally, the total amount of data created, captured, copied, and consumed globally is forecasted to increase rapidly, from 64 ZB in 2020 to more than 180 ZB by 2025—a far cry from the 2 ZB created in 2010.

How Are Large Amounts of Data Managed?

Only the data center's powerful, water-cooled HPE/Cray supercomputers can search this much data. Together, these systems draw about 65 megawatts of electricity, at a cost of about $40 million per year. The facility is estimated to use 1.7 million gallons of water per day. That water cools both the supercomputers and the servers, which store the data on solid-state drives. Solid-state drives have no moving parts. They need only a fraction of the power, and therefore of the cooling, that spinning hard disk drives require.
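As a rough consistency check on those figures, the sketch below converts a constant 65 MW draw into annual energy and cost. The flat price of $0.07 per kWh is an assumption chosen as a plausible industrial rate. The facility's actual electricity contract is not public.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_energy_mwh(load_mw: float) -> float:
    """Energy used by a constant load running all year, in megawatt-hours."""
    return load_mw * HOURS_PER_YEAR


def annual_cost_usd(load_mw: float, price_per_kwh: float) -> float:
    """Annual electricity cost for a constant load at a flat price per kWh."""
    kwh = annual_energy_mwh(load_mw) * 1000  # 1 MWh = 1,000 kWh
    return kwh * price_per_kwh


# ASSUMPTION: a flat $0.07/kWh rate; the real price paid is not published.
energy = annual_energy_mwh(65)
cost = annual_cost_usd(65, 0.07)
print(f"{energy:,.0f} MWh/year, about ${cost / 1e6:.1f} million/year")
```

Under that assumed rate, 569,400 MWh a year comes to just under $40 million, in line with the reported figure.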

The high-availability, fault-tolerant system has no single point of failure. Data is striped across virtual disk arrays and mirrored for instant failover.
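Striping combined with mirroring resembles a RAID 10 layout. The toy model below is not the facility's actual design. It splits data into fixed-size blocks and stripes them round-robin across mirrored disk pairs. On a read, it takes each block from whichever copy in its pair is still healthy. The class name, the block size, and the pair count are all hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple

BLOCK_SIZE = 4  # bytes per block; tiny on purpose so the layout is easy to follow


class MirroredStripeArray:
    """Toy RAID 10-style array: blocks are striped across disk pairs, each pair mirrored."""

    def __init__(self, num_pairs: int) -> None:
        # Each pair is (primary, mirror); each disk maps block index -> block bytes.
        self.pairs: List[Tuple[Dict[int, bytes], Dict[int, bytes]]] = [
            ({}, {}) for _ in range(num_pairs)
        ]
        self.failed: Set[Tuple[int, int]] = set()  # (pair index, side) of failed disks
        self.num_blocks = 0

    def write(self, data: bytes) -> None:
        for primary, mirror in self.pairs:  # start from empty disks
            primary.clear()
            mirror.clear()
        blocks = [data[i:i + BLOCK_SIZE] for i in range(0, len(data), BLOCK_SIZE)]
        for index, block in enumerate(blocks):
            primary, mirror = self.pairs[index % len(self.pairs)]  # stripe round-robin
            primary[index] = block  # write the block to both disks in the pair
            mirror[index] = block
        self.num_blocks = len(blocks)

    def fail_disk(self, pair_index: int, side: int) -> None:
        self.failed.add((pair_index, side))

    def read(self) -> bytes:
        out: List[bytes] = []
        for index in range(self.num_blocks):
            pair_index = index % len(self.pairs)
            block: Optional[bytes] = None
            for side in (0, 1):  # fail over to the mirror if the primary is down
                if (pair_index, side) not in self.failed:
                    block = self.pairs[pair_index][side][index]
                    break
            if block is None:
                raise IOError(f"both disks in pair {pair_index} have failed")
            out.append(block)
        return b"".join(out)


raid = MirroredStripeArray(num_pairs=3)
raid.write(b"striped and mirrored data")
raid.fail_disk(pair_index=1, side=0)  # lose the primary disk of pair 1
print(raid.read())  # b'striped and mirrored data'
```

Striping spreads reads and writes across many disks, and mirroring lets any single disk fail without data loss. Data is lost only if both disks in the same pair fail.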

Redundant ping, power, and pipe (network connectivity, electricity, and cooling) keep the information available to or from any device. All data is protected by 256-bit Advanced Encryption Standard (AES) encryption, both in transit and at rest, and access is granted only by permission. Even the mighty Cray system could not break a single AES-256 key by brute force. An exhaustive key search would take far longer than the age of the universe.
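To put AES-256 in perspective, the sketch below estimates how long an exhaustive key search would take. The search rate of 10^18 keys per second is a deliberately generous assumption, not a published figure for any real machine. On average, an attacker must try half of the 2^256 possible keys.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
AGE_OF_UNIVERSE_YEARS = 13.8e9


def brute_force_years(key_bits: int, keys_per_second: float) -> float:
    """Expected years to find a key by exhaustive search (half the keyspace on average)."""
    expected_trials = 2 ** (key_bits - 1)
    return expected_trials / keys_per_second / SECONDS_PER_YEAR


# ASSUMPTION: 10**18 key trials per second, an optimistic rate chosen for illustration.
years = brute_force_years(256, 1e18)
print(f"{years:.1e} years, or {years / AGE_OF_UNIVERSE_YEARS:.1e} times the age of the universe")
```

Raising the assumed rate a millionfold changes almost nothing. The result would still be on the order of 10^45 years.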

The southern end of the Utah Data Center, featuring the chiller plant and water tower. Image source: National Security Agency.

Diesel locomotive engines back up the power supply and carry enough fuel to run for a year or more. Water storage, both under and above ground, can sustain cooling for a similar period. The buildings can be sealed from the outside air to keep chemical attacks, insect swarms, and fire away from the data halls.

The National Security Agency (NSA) has several other data centers in Hawaii, Colorado, Texas, Georgia, and Maryland. All of them are interconnected by dedicated fiber-optic lines in a single, secure data cloud.

Perspective on US Government Data Hosting

The Watchers: The Rise of America’s Surveillance State, by American journalist Shane Harris, published in 2010, details the people, contractors, and US government employees with high-level security clearances who work in these data centers to analyze the data.

In 2013, Edward Snowden, a former government contractor and himself a “watcher,” leaked a trove of classified NSA documents to journalists. The journalists published portions of them, making that information available to anyone, friend or foe. The trove included details about the Utah Data Center.

The US government responded to the leak by publishing information on NSA sites, including a page dedicated to the Utah Data Center. That page details the systems and processes in place as of 2013. Over the last nine years, public debate over privacy rights, the law, and national security has intensified, fostering mistrust among tech companies, the government, and the people. No further details have been released. Even so, we can estimate today’s capabilities from the 2013 revelations and from Moore’s Law. Moore’s Law is the observation that the number of transistors on a chip doubles roughly every two years, and storage capacity has historically grown at a similar pace.
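One rough way to apply Moore’s Law to the 2013 baseline is to compound one doubling every two years. The commonly cited period is two years. Treating storage capacity as following the same curve is an assumption, not a measured result. The sketch below applies that rule from 2013 to 2022.

```python
def growth_factor(start_year: int, end_year: int, doubling_period_years: float = 2.0) -> float:
    """Multiplier implied by doubling once every `doubling_period_years` years."""
    return 2 ** ((end_year - start_year) / doubling_period_years)


# Nine years at one doubling every two years is 4.5 doublings.
factor = growth_factor(2013, 2022)
print(f"{factor:.1f}x")  # 22.6x
```

If capacity doubled every year instead, the same nine years would give 2^9 = 512x. Estimates like this are very sensitive to the assumed doubling period.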

In a 2013 interview for NPR’s All Things Considered, the NSA’s chief information officer, Lonny Anderson, described the facility. Anderson runs the agency’s data acquisition, storage, and processing effort. “We built it big because we could,” he said. “It’s a state-of-the-art facility. It’s the nicest data center in the US government, maybe one of the nicest data centers there is.”

It also gives the federal government’s intelligence agencies easier access to the email, text message, cell phone, and landline metadata the NSA collects.

Should We Be Worried?

“They are looking for particular words, particular names, particular phrases or numbers … information that’s on their target list,” says James Bamford, author of three books about the NSA and a 2012 Wired article focused on the new data center.

But Anderson asserts that there “is no intent here to become ‘Big Brother.’ … There’s no intent to watch American citizens. There is an attempt to ensure Americans are safe. And in some cases, the things you’ve read about the accesses we have [and] the capability we’ve developed are all about doing that.”

The Utah Data Center entrance quotes the “nothing to hide” argument. Image source: National Security Agency.

He continues: “In the past, for every particular activity you needed, you created a repository to store that data. And it was very purpose-built to do ‘X,’ whatever ‘X’ was. The cloud enables us to … put that data together and make sense of it in a way that, in the past, we couldn’t do.”

There’s also a compliance component to the NSA’s cloud, Anderson says, in which the agency holds data—and restricts access—based on the requirements of federal law and the security clearances of the analysts seeking information. “As we ingest that data, we tag it in a certain way that ensures compliance,” he says.
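Anderson does not say how the tagging works. The sketch below is a purely hypothetical illustration of the general idea, known as attribute-based access control. At ingest, each record gets a classification level and a set of legal-authority labels. A record is released only to an analyst whose clearance and authorizations cover both. Every name here is invented for the example, including the levels, the labels, and the `Record` and `Analyst` types.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, List

# Hypothetical classification levels, ordered from lowest to highest.
LEVELS = {"UNCLASSIFIED": 0, "SECRET": 1, "TOP SECRET": 2}


@dataclass(frozen=True)
class Record:
    content: str
    level: str                   # classification tag applied at ingest
    authorities: FrozenSet[str]  # hypothetical legal-authority labels


@dataclass(frozen=True)
class Analyst:
    name: str
    clearance: str
    authorizations: FrozenSet[str] = field(default_factory=frozenset)


def ingest(content: str, level: str, authorities: List[str]) -> Record:
    """Tag a record as it enters the store; unknown levels are rejected."""
    if level not in LEVELS:
        raise ValueError(f"unknown classification level: {level}")
    return Record(content, level, frozenset(authorities))


def can_access(analyst: Analyst, record: Record) -> bool:
    """Allow access only if clearance is high enough and all required authorities are held."""
    cleared = LEVELS[analyst.clearance] >= LEVELS[record.level]
    authorized = record.authorities <= analyst.authorizations  # subset test
    return cleared and authorized


record = ingest("intercepted metadata", "SECRET", ["AUTHORITY_A"])
alice = Analyst("alice", "TOP SECRET", frozenset({"AUTHORITY_A"}))
bob = Analyst("bob", "TOP SECRET")
print(can_access(alice, record), can_access(bob, record))  # True False
```

Tagging at ingest means the access decision depends only on the record's labels and the analyst's attributes. It does not depend on which repository the data came from.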

Bamford says the Utah Data Center’s contribution to the cloud is basically to “provide storage and will be accessible via fiber-optic cables to much of the NSA’s infrastructure around the world.”

Where does the data come from? Data from satellites, undersea cables, routers, and networks is swept for any information the sophisticated spyware can glean. Intercepting internet traffic on undersea cables outside the US, as well as on routers and switches, has reaped a cornucopia of foreign and domestic data in the name of combating terrorism and defending the United States.
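Bamford describes analysts looking for particular words, names, phrases, or numbers on a target list. In effect, that means matching traffic against a list of selectors. The sketch below is a generic illustration of that idea and does not describe any actual NSA system. It scans messages for any selector on a hypothetical target list, using one compiled, case-insensitive regular expression. It assumes each selector starts and ends with a letter or digit, so that whole-word boundaries behave as expected.

```python
import re
from typing import Iterable, List, Tuple


def build_matcher(selectors: Iterable[str]) -> "re.Pattern[str]":
    """Compile selectors into one case-insensitive, whole-word regular expression."""
    ordered = sorted(selectors, key=len, reverse=True)  # prefer longer selectors
    if not ordered:
        raise ValueError("at least one selector is required")
    alternatives = "|".join(re.escape(s) for s in ordered)  # treat selectors literally
    return re.compile(r"\b(?:" + alternatives + r")\b", re.IGNORECASE)


def scan(messages: Iterable[str], matcher: "re.Pattern[str]") -> List[Tuple[int, List[str]]]:
    """Return (message index, matched selectors) for every message with at least one hit."""
    hits: List[Tuple[int, List[str]]] = []
    for index, message in enumerate(messages):
        found = matcher.findall(message)
        if found:
            hits.append((index, found))
    return hits


# Hypothetical target list and message stream, invented for the example.
selectors = ["example phrase", "555-0100", "jdoe"]
messages = [
    "Call 555-0100 tonight",
    "nothing to see here",
    "jdoe mentioned the Example Phrase again",
]
print(scan(messages, build_matcher(selectors)))
# [(0, ['555-0100']), (2, ['jdoe', 'Example Phrase'])]
```

Compiling all selectors into a single pattern means each message is scanned once, however long the target list grows.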

Russia and China Host All Citizen Data

Russia significantly restricts access to citizen and company data and requires that data to be hosted on servers inside the country, where the authorities can access it. Unlike people in the US, people in Russia have little practical right to privacy. LinkedIn refused to comply with the data-localization rules and was banned in Russia as a result. Facebook and Twitter were fined for failing to localize user data and were later blocked in 2022. The final amendments to Russia’s data-localization law went into effect September 1, 2015. They require all domestic and foreign companies to accumulate, store, and process the personal information of Russian citizens on servers physically located within Russian borders. Any organization that stores the information of Russian nationals, whether customers or social media users, must move that data to Russian servers.

Compliance with the new legislation is strictly monitored and enforced by Russia’s Federal Service for Supervision of Communications, Information Technology, and Mass Media, known as Roskomnadzor. The commercial market for data centers in Russia has been growing at around 25% per year over the last five years. This growth is facilitated by both the cool climate, which reduces cooling costs, and changes to the country’s data protection law, which calls for the personal data of Russian citizens to be stored on servers located in Russia.

Chinese Data Centers

China has the biggest data center in the world and, experts assume, the most data. It has more than 1.4 billion people, and its data centers host all of their data on behalf of the Chinese government. Citizens also have few privacy rights against the state, and free speech and dissent are suppressed.

The 10,763,910-square-foot (1-million-square-meter) China Telecom data center in Hohhot, China. Image source: RankRed Media.

China Telecom has the world’s largest internet data center and holds more than 50% of the Chinese data-center market. It has an extensive global network of more than 400 data centers in prime regions of mainland China and in overseas markets.

China will generate more data than the United States by 2025 as it pushes into new technologies such as the Internet of Things (IoT), according to a study from the International Data Corporation and data-storage firm Seagate.

In 2018, China generated about 7.6 ZB of data, a figure projected to grow to 48.6 ZB by 2025. The US generated about 6.9 ZB that same year, and its total is projected to reach about 30 ZB by 2025.
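The two projections imply different growth rates. The sketch below computes the compound annual growth rate (CAGR) for each pair of figures quoted above, using CAGR = (end / start)^(1 / years) − 1 over the seven years from 2018 to 2025.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Figures from the text: ZB generated in 2018 and projected ZB in 2025.
projections = {
    "China": (7.6, 48.6),
    "US": (6.9, 30.0),
}
for country, (zb_2018, zb_2025) in projections.items():
    print(f"{country}: {cagr(zb_2018, zb_2025, 2025 - 2018):.1%} per year")
```

The projections work out to roughly 30% a year for China and roughly 23% a year for the US. A gap of that size compounds quickly over seven years.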

While the US government collects and stores some of our data, citizens and companies are free to host their data on the platform of their choosing.

Takeaways

Data storage and privacy are becoming increasingly relevant. As all this data zips around the world, the US remains the world’s leader in protecting citizen rights and preventing the world’s threat actors from doing harm both here and abroad.

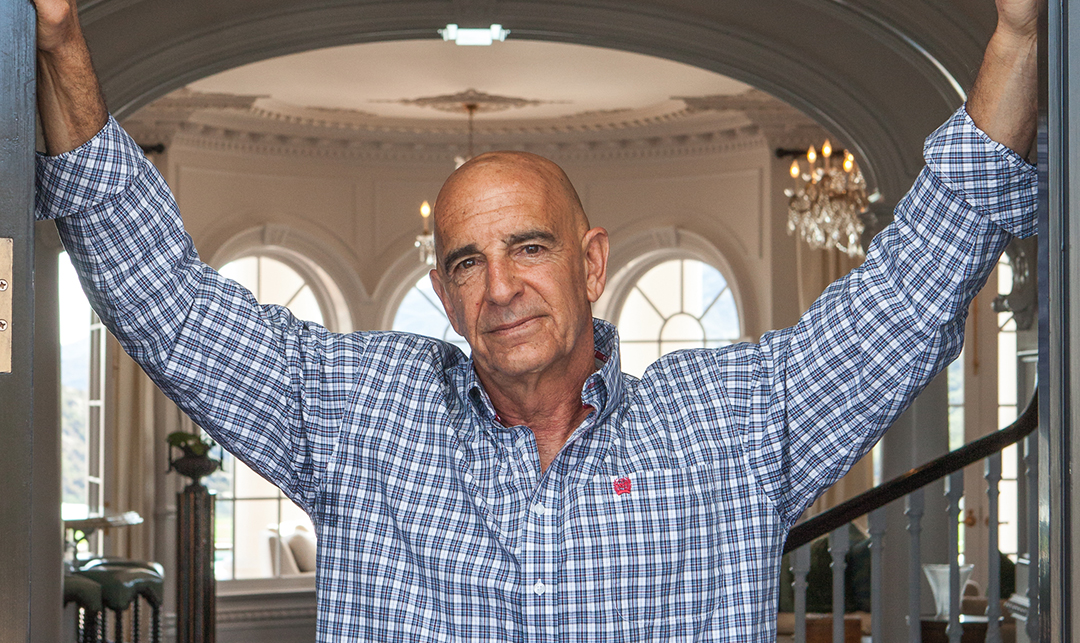

Throughout his career, Keith Gunther has held management positions at VERITAS Software/Symantec as well as 3M, Fujifilm, Xerox, and ARC.